Supercomputing Gets More Personal: Modular, Flexible, Agile

Why High-Performance Computing (HPC) Matters for Digital Transformation

Guest blog by Earl Dodd, Global Technical Solutions Architect for HPC and Supercomputing, World Wide Technology

I’ve been around long enough to remember when high-performance computing (HPC) was something only scientists, researchers, and certain government agencies cared about. While some of us were enjoying the relative peace and stability of the late 1980s, or (gasp) were not even born yet, some of the world’s most influential organizations were developing technology that has fueled decades of scientific progress. Now, as our ability to collect data grows, so does the need for computational horsepower to analyze it.

This post explores why HPC has become a best practice for digital transformation initiatives. Companies and governments need to transform, or they will be left behind in this digital world. Data is critical to any initiative, and digital transformation requires that you find this data so you can put it to use. Intelligent capture, digestion, and data processing technologies that use HPC and AI systems underpin the ability to advance processes from mere digitization to real transformation. I call this “fusion computing.”

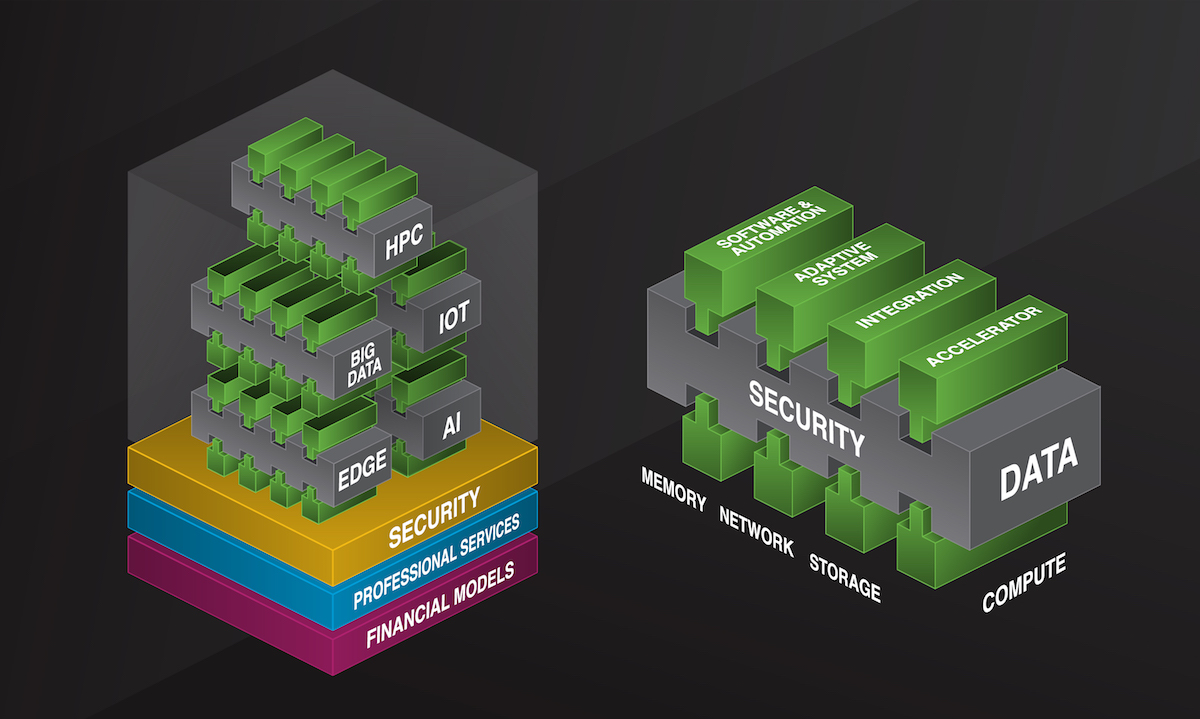

An HPC + AI + Big Data architecture is the foundation of the fusion computing services framework. This framework represents a convergence of the HPC and data-driven AI communities, which arguably run similar data- and compute-intensive workflows. Just as data security and business models such as the cloud (public, private, and hybrid) have evolved, the high-performance architecture for HPC + AI + Big Data has evolved to meet the agility demands of businesses and governments.

The important fusion computing takeaways are:

- an open high-performance architecture drives agility, faster innovation, and just-in-time procurement;

- data is the new currency and can be shared more easily with mainstream workflows;

- the framework provides a fully orchestrated, extensible, and traceable experience; and

- the HPC cloud enables an “as a service” economy for business and government.

How HPC has evolved

Remember when the mainframe was called the engine of progress? HPC has its roots in the design and operation of the mainframe and has evolved through many phases: 1) the 1970s time-sharing mainframe, 2) 1980s distributed computing, 3) 1990s parallel computing, 4) centralized demand for HPC, 5) centralized HPC with data security, and 6) the public, private, and hybrid HPC cloud. As author Stephen King said, “Sooner or later, everything old is new again.” That holds true for HPC and supercomputing.

Over the last several decades, manufacturers, systems, and architectures have continually appeared and disappeared from the HPC landscape. This rapid change is expected to continue, if not accelerate. What is clear, though, is that the need for data and computation remains.

What your business needs to know about HPC today

High-performance computing is the ability to process data and perform complex calculations at high speed in dense packaging. HPC uses parallel processing to run advanced application programs efficiently, reliably, and quickly. It has enabled advances in science, engineering, industry, economics, finance, society, health, defense, and security.

Today HPC is used in industry to improve products, reduce production costs, and decrease the time it takes to develop new products. As our ability to collect big data increases, the need to be able to analyze the data also increases — this is an area for which HPC can be a most useful tool.

Historically, supercomputers and clusters were specifically designed to support HPC applications developed to solve “Grand Challenges,” the big problems in science and engineering. HPC applications typically consist of a large collection of compute-intensive tasks that must be processed in a short period. These tasks are often parallel and tightly coupled, so they require low-latency interconnection networks to minimize data exchange time. The standard metric for evaluating HPC systems is floating-point operations per second (FLOPS), now counted in TeraFLOPS or even PetaFLOPS, which measures how many floating-point operations a computing system can perform each second.
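To make the FLOPS metric concrete, a system’s theoretical peak is commonly estimated as nodes × cores per node × clock rate × floating-point operations per core per cycle. The Python sketch below applies that formula to a hypothetical cluster; the function names and every hardware figure are illustrative assumptions, not measurements of any real system. The 32 FLOPs per cycle assumes, for example, two 512-bit fused multiply-add units per core working on 64-bit values.

```python
def peak_flops(nodes: int, cores_per_node: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak = nodes x cores per node x clock rate x FLOPs per core per cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def to_unit(flops: float) -> str:
    """Format a FLOPS figure in the largest unit it reaches (PetaFLOPS, TeraFLOPS, GigaFLOPS)."""
    units = [("PetaFLOPS", 1e15), ("TeraFLOPS", 1e12), ("GigaFLOPS", 1e9)]
    for name, scale in units:
        if flops >= scale:
            return f"{flops / scale:.2f} {name}"
    return f"{flops:.0f} FLOPS"


# Hypothetical cluster: 100 nodes, 64 cores each, 2.0 GHz, 32 FP64 FLOPs per core per cycle
peak = peak_flops(nodes=100, cores_per_node=64, clock_hz=2.0e9, flops_per_cycle=32)
print(to_unit(peak))  # prints "409.60 TeraFLOPS"
```

Sustained performance, as measured by benchmarks such as LINPACK (used for the TOP500 list), is typically lower than this theoretical peak.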

For an organization, data are coming at much faster rates than anyone had expected. Whether it’s from the Internet of Things (IoT), webpages, commercial transactions, or other sources, the amount of data pouring into enterprise data centers often exceeds current storage capacity. This flood of data creates a new class of data consolidation, data handling, and data management challenges. Organizations can’t just let the data pile up (i.e., the store-and-ignore tactic seen in data warehouses). They now need to make deliberate decisions about what data to store and where it needs to be moved, what data to analyze for which purposes, and what data to set aside or archive for potential future value.
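The store, analyze, and archive decisions described above can be written down as an explicit policy rather than left to a store-and-ignore default. The Python sketch below is a minimal, hypothetical tiering rule: the `Dataset` type, the `choose_tier` function, the tier names, and the 7- and 90-day thresholds are all illustrative assumptions, and a real policy would also weigh cost, compliance, and workload requirements.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical thresholds, in days since last access
HOT_DAYS = 7
WARM_DAYS = 90


@dataclass
class Dataset:
    name: str
    last_accessed: date
    needed_for_analysis: bool  # is an active workflow expected to analyze this data?


def choose_tier(ds: Dataset, today: date) -> str:
    """Assign a dataset to a hypothetical storage tier based on recency and need."""
    age = (today - ds.last_accessed).days
    if ds.needed_for_analysis and age <= HOT_DAYS:
        return "hot"      # keep on fast storage close to the compute nodes
    if age <= WARM_DAYS:
        return "warm"     # keep on capacity storage for occasional analysis
    return "archive"      # set aside for potential future value


today = date(2024, 1, 31)
print(choose_tier(Dataset("sensor-feed", date(2024, 1, 30), True), today))  # prints "hot"
```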

Data is currency

In the past, vast amounts of business data were stored and ignored. Now, businesses use data in the same way scientists and researchers do: its analysis fuels innovation, competitiveness, and business success.

Many organizations now need IT solutions that couple high-performance computing with data analytics. This convergence is driving a shift to high-performance data analytics. New applications will emerge not only in science and engineering, but also in commerce — in areas such as information retrieval, decision support, financial analysis, retail surveillance, or data mining.

Data-intensive algorithms operate on vast databases containing massive amounts of information (e.g., about customers or stock prices) that have been collected over many years and are no longer being ignored. In particular, the rapid growth in the amount of data stored worldwide will make HPC indispensable.

Running many applications that require high-performance architecture produces a large amount of data to be analyzed and presented. To manage such vast and diverse data, specific tools, policies, libraries, and concepts have been developed to aid the visualization of output data from a supercomputer. Presenting output data from modeling and simulation in a visually understandable way may itself require supercomputing resources. A constant theme is the convergence of HPC, AI/ML/DL, and big data at scale, which requires a new, agile, open high-performance architecture and the fusion computing services framework.

Business is in the game of science

As the US Department of Energy (DOE) proclaimed back in 2018, “the future is in supercomputers.” The new systems coming online at the DOE in a few years will be among the fastest supercomputers in the world. In medicine and health, in discovery and innovation, we can build new systems that genuinely improve the wellbeing and security of humanity.

A philosophy for delivering and communicating applied science to the public in times of extreme change or crisis is a business imperative, as is the capability to do so. The role business plays in leveraging scientific knowledge can help make public-sector decision-making more effective. HPC and AI have become an archetypal framework for data-driven decision-making.

The National Academies of Sciences, Engineering, and Medicine, a private, nonprofit, nonpolitical professional organization, has defined its role in helping business navigate applied science. Its key takeaways:

- A democratic society depends on science to deliver us from health, social, environmental, and economic crises.

- For the foreseeable future, policymakers and communities will struggle to make decisions now that position them well for an uncertain future.

- Public and private sectors have a role in helping to identify significant opportunities for irreplaceable science, and in integrating the results where appropriate into actionable and strategic science.

The open high-performance architecture and fusion computing support businesses and governments in achieving digital agility and innovation. Together they act as the data-driven yin and yang of success for the modern organization, and both can be applied in an efficient and motivating way.

The hybrid cloud model brings game-changing additional capability and capacity through complementary, interconnected resources on- and off-premises, both of which are necessary to achieve an integrated, fully secure high-performance environment at scale. Fusion computing is ideally suited to meet the most demanding requirements of security, cloud, and performance.

Computing will continue to get more powerful and personal

Over time, these supercomputing machines will become smaller, require less physical infrastructure to cool them, and have a much smaller energy footprint than current systems.

The way to achieve these advances is to go back to first principles of design and engineering. As with brain science, hierarchical modularity, flexibility, and agility provide the roadmap to supercomputer design success, and disaggregation and composability are paramount on that roadmap today.

Personalization, the tailoring of a service or product to an individual’s or business’s requirements, is now widely regarded as a global megatrend. Digital technology now enables supercomputing manufacturers and skilled value-added resellers (VARs) and systems integrators (SIs) to provide the personalization and flexibility of custom-made manufacturing, but on a large scale.

With the advent of composability based on open standards and applied at every level of public and private cloud and infrastructure as a service (IaaS), massive supercomputing performance is at your fingertips for deploying and executing business workflows. A personalized supercomputing solution can zero in on your business challenges, research goals, use cases, and data-driven opportunities.

A blended, scalable and secure computing paradigm

These trends and advances lead us to a blended, scalable, secure computing paradigm: the convergence of HPC, AI, and big data. This convergence is not new and has been underway for years. What is new is the addition of edge computing and IoT/IIoT to this paradigm. The open high-performance architecture is the fundamental infrastructure building block for fusion computing.

Of course, the global, agile, cloud-ready enterprise can face challenges and frustrations with the ad hoc approaches applied to this emerging computing paradigm (e.g., HPC, Big Data/HPDA, AI/ML/DL, Edge, IoT/IIoT). With fusion computing, World Wide Technology (WWT) is unifying a complex computing world of different, growing, and often competing needs. WWT has defined “fusion computing” as a new solution category representing a unified architecture for the convergence of the ecosystems at the edge, the core, and the cloud while maintaining a consistent, security-first mindset.

WWT and its top partners have created a reference architecture that fundamentally addresses how competitive enterprises interact with technology, processes, business models, and people. Fusion computing is not just a product roadmap: it defines a balanced, open architecture, design, and operation of the enterprise’s overall computing, data, networking, application, and security ecosystem in a composable fashion. The high-performance architecture is built around the self-managing characteristics of distributed computing resources, which adapt to unpredictable changes while hiding intrinsic complexity from managers, operators, and users. With it, autonomic computing starts to deliver on total cost of ownership (TCO).

The tools and technologies for fusion computing are maturing rapidly. Better still, HPC and big data platforms are converging in a way that reduces the need to move data back and forth between HPC and storage environments. This convergence helps organizations avoid much of the overhead and latency that come with disparate systems.

Today, organizations can choose from a rapidly growing range of tools and technologies like streaming analytics, graph analytics, and exploratory data analysis in open high-performance environments. Let’s take a brief look at these tools.

- Streaming analytics offers new algorithms and approaches to help organizations rapidly analyze high-bandwidth, high-throughput streaming data. These advances enable solutions for emerging graph patterns, data fusion and compression, and massive-scale network analysis.

- Graph analytics technologies enable graph modeling, visualization, and evaluation for understanding large, complex networks. Specific applications include semantic data analysis, big data visualization, data sets for graph analytics research, activity-based analytics, performance analysis of big graph data tools, and anti-evasive anomaly detection.

- Exploratory data analysis provides mechanisms to explore and analyze massive streaming data sources to gain new insights and inform decisions. Applications include exploratory graph analysis, geo-inspired parallel simulation, and cyber analytic data.
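The post does not tie streaming analytics to a specific algorithm. As one minimal illustration of the idea, the Python sketch below keeps a running mean and standard deviation with Welford’s online algorithm, which processes each reading once in constant memory, and flags readings whose z-score exceeds a threshold. The `RunningStats` class, the `flag_anomalies` function, the threshold of 3.0, and the warm-up length are hypothetical choices; production streaming-analytics platforms use far more sophisticated, distributed methods.

```python
import math
from typing import Iterable, Iterator, Tuple


class RunningStats:
    """Welford's online algorithm: mean and variance in a single pass, O(1) memory."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; reported as 0.0 until at least two points arrive
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def flag_anomalies(stream: Iterable[float], z_threshold: float = 3.0,
                   warmup: int = 5) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far from the statistics seen so far."""
    stats = RunningStats()
    for i, x in enumerate(stream):
        # Score each reading against the history *before* it, then fold it in
        if stats.n >= warmup and stats.std > 0:
            if abs(x - stats.mean) / stats.std > z_threshold:
                yield i, x
        stats.update(x)


print(list(flag_anomalies([10, 11, 9, 10, 11, 9, 10, 50, 10])))  # prints [(7, 50)]
```

Because each reading updates only three numbers, the approach suits high-throughput streams where storing everything before analyzing it is impractical.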

These are some of the countless advances made possible by the rise of technologies and solutions for high-performance data analytics.