Device-Managed Shingled Magnetic Recording (DMSMR) technology is different from Conventional Magnetic Recording (CMR), and can be implemented in various ways. I wanted to share an overview of our DMSMR architecture, and how we apply specific capabilities and configurations.

Logical Block Address Indirection

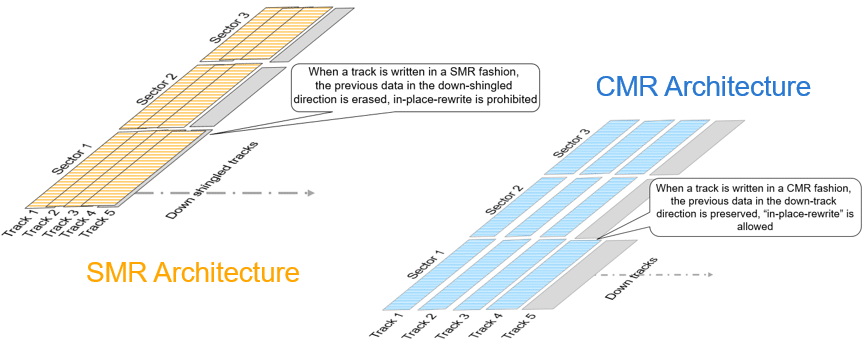

In CMR, each track is written independently of its neighboring tracks, so there is minimal interaction between tracks. Data sectors can be written and re-written repeatedly. Furthermore, the LBA (Logical Block Address) location is absolute and fixed after format.

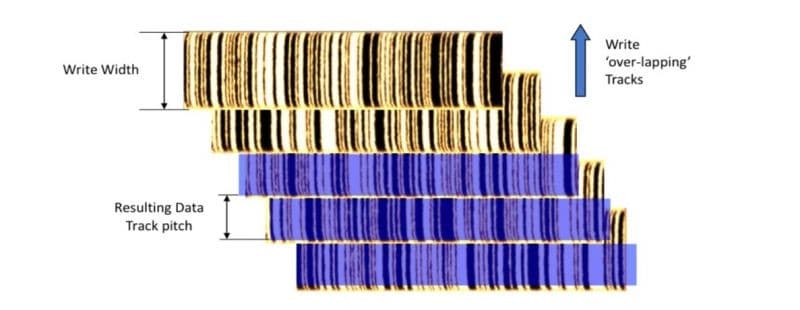

Conversely, in SMR drives, data tracks are laid down by overlapping the previous track (hence the name “Shingled”). Tracks are packed much closer together this way, which helps increase areal density. However, with this architecture, writing a track erases data on the “down shingle” track, so new data cannot be written to a previously written location without destroying existing data on the down shingle track. To accomplish the new write while preserving the other data, the entire data segment (SMR zone) needs to be re-written.

This is where things get interesting. In order to re-write the data, all of the data within the same data segment (SMR zone) is relocated elsewhere. In doing so, the absolute address of the data is remapped. This remapping is called LBA Indirection. It governs how the device works with the underlying recording technology, and how the device can be tuned for different applications and workloads by orchestrating where, how, and when data is placed.
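The remapping idea can be illustrated with a toy model. The sketch below is not the drive's firmware; the class name `IndirectionMap`, its fields, and the simple "append to the open zone" policy are all assumptions made for illustration. It shows only the core property: a logical address stays the same while its physical location changes on every re-write.

```python
class IndirectionMap:
    """Toy model of LBA indirection over append-only zones (hypothetical)."""

    def __init__(self, zone_count, zone_size):
        self.zone_size = zone_size
        # Each zone is an append-only list of (lba, data) slots.
        self.zones = [[] for _ in range(zone_count)]
        self.lba_to_phys = {}  # lba -> (zone index, offset within zone)
        self.open_zone = 0

    def write(self, lba, data):
        # Advance to the next zone once the open one is full.
        if len(self.zones[self.open_zone]) == self.zone_size:
            if self.open_zone + 1 == len(self.zones):
                raise RuntimeError("no free zone; cleanup would be needed")
            self.open_zone += 1
        zone = self.zones[self.open_zone]
        zone.append((lba, data))
        # Remap the LBA; any earlier copy simply becomes stale in place.
        self.lba_to_phys[lba] = (self.open_zone, len(zone) - 1)

    def read(self, lba):
        zone_idx, offset = self.lba_to_phys[lba]
        return self.zones[zone_idx][offset][1]


m = IndirectionMap(zone_count=2, zone_size=2)
m.write(7, "old")
m.write(7, "new")  # same logical address, new physical slot
```

After the second write, `m.read(7)` returns the newer data, and the stale copy stays in its old slot until some later cleanup reclaims it.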

Dynamic Data and Zones

Our approach to DMSMR is dynamic. We designate various SMR zone sizes for different purposes and applications. Using machine learning and pattern recognition in our system, the drive detects types of data so that we can coalesce similar data types together to maximize performance. For example, logs and metadata for indexing or journaling of small block writes will be placed somewhere more advantageous for garbage collection, while large block transfers will be placed into large zones.

Another example is designating a large number of small zones for pure random I/O, as in the case of NAS applications. Here we can either buffer and collate the data so that it can be flushed more efficiently into final destination zones, or we can choose to leave the data there for permanent storage, thereby cutting down the need for more background activity.

The configuration of large and/or small shingle zones lets us confine data movement to background activity, and perform it only when necessary. This is possible only because of the LBA indirection inherent in the SMR architecture. The number of possible configurations is vast, and the flexibility is greater still.
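As a rough illustration of routing data into different zone classes, the sketch below uses a fixed size threshold. This is a deliberately simple stand-in: the text above describes machine learning and pattern recognition, which this code does not attempt. The names `SMALL_WRITE_LIMIT`, `choose_zone_class`, and `route_writes`, and the 64 KiB cutoff, are hypothetical.

```python
SMALL_WRITE_LIMIT = 64 * 1024  # hypothetical cutoff in bytes, not a real drive parameter


def choose_zone_class(write_size_bytes):
    """Return the zone class a write would land in under this toy rule."""
    return "small" if write_size_bytes <= SMALL_WRITE_LIMIT else "large"


def route_writes(writes):
    """Group (lba, size_in_bytes) writes by zone class so similar data stays together."""
    placement = {"small": [], "large": []}
    for lba, size in writes:
        placement[choose_zone_class(size)].append(lba)
    return placement


placement = route_writes([(10, 4096), (500, 1024 * 1024), (11, 8192)])
```

Here the two small journal-style writes end up together in the "small" class, while the 1 MiB transfer goes to the "large" class.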

New Benefits That Emerged

This way of dealing with intelligent data placement has brought about a few interesting benefits:

Data integrity: Because DMSMR drives put data down sequentially, track ECC (parity information accumulated on a per-track basis) can be placed at the end of each data track. It enables the drive to correct up to 8K bytes of data per track during a read, with near on-the-fly performance. This exception-handling capability is especially beneficial in the event of shock, fan vibration, grown defects, or other less-than-ideal operating conditions. This added data protection during reads comes naturally in an SMR architecture.

Furthermore, there are techniques that SMR drives can deploy when they run into command faults during a write in the same non-ideal operating environment. The drive can either place the data with larger track spacing when its ability to track-follow is less than perfect, or swiftly rectify data corrupted by an off-track write and migrate it elsewhere. It can also seamlessly perform write abort recovery by rewriting the same data on different tracks.
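To give a feel for how parity accumulated across a track can repair a bad sector, here is a minimal sketch using plain XOR parity. This is an assumption chosen for simplicity: the actual track ECC scheme is not described here and is certainly more capable than single-sector XOR. The function names are hypothetical.

```python
from functools import reduce


def xor_bytes(a, b):
    """XOR two equally sized byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def track_parity(sectors):
    """Accumulate all sectors on a track into one parity sector."""
    return reduce(xor_bytes, sectors)


def recover_sector(sectors, parity, bad_index):
    """Rebuild the sector at bad_index from the parity and the surviving sectors."""
    survivors = [s for i, s in enumerate(sectors) if i != bad_index]
    return reduce(xor_bytes, survivors, parity)


track = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = track_parity(track)  # would be written at the end of the track
rebuilt = recover_sector(track, parity, bad_index=1)
```

Because the track is written in one sequential pass, the parity can be accumulated as the sectors stream by and appended at the end, which is the property the paragraph above relies on.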

Throughput: SMR architecture also allows us to coalesce the data in cache more freely, again mainly due to LBA Indirection. Performance can improve when a burst of random writes is sequentialized into a local zone. This burst of performance does come at a cost, however: the data later needs to be migrated to improve near-sequential read performance. With an appropriate amount of idle time, such as in a typical NAS environment, this benefit can be easily harvested in the right workload.
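The sequentializing of a random-write burst can be sketched as follows. This is an illustrative model only; `coalesce_and_flush`, its arguments, and the choice to order the flush by LBA are assumptions, not a description of the actual cache policy.

```python
def coalesce_and_flush(cached_writes, zone_start):
    """Flush cached random writes as one sequential run starting at zone_start.

    cached_writes: list of (lba, data) in arrival order; later entries win.
    Returns (layout, lba_map): the data in physical order, and lba -> physical address.
    """
    latest = {}
    for lba, data in cached_writes:
        latest[lba] = data  # keep only the newest copy of each LBA

    layout = []
    lba_map = {}
    for offset, lba in enumerate(sorted(latest)):
        layout.append(latest[lba])
        lba_map[lba] = zone_start + offset
    return layout, lba_map


burst = [(900, "c"), (5, "a"), (900, "d"), (42, "b")]
layout, lba_map = coalesce_and_flush(burst, zone_start=1000)
```

Four scattered writes become three adjacent physical blocks. LBAs 5, 42, and 900 are now physical neighbors even though they are logically far apart, which is why later migration may be needed for good near-sequential reads.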

DMSMR Considerations

Data movement requires idle time: One of the most discussed topics in DMSMR drives is data movement. It is the other side of the LBA Indirection coin. Moving data freely requires the drive to have idle time to perform these tasks in the background. Without the ability to preemptively maintain disk space, the drive may, under specific conditions, take longer to complete a command as its resources dwindle.

A simple analogy: Imagine the drive as a warehouse that organizes all your storage for you. As more cartons of various sizes begin to pile in, we need more time to reorganize them. The more we delay the work, the less open space we have to move the cartons around and tidy up. If we don’t allow for this time, the disorganization will lead to cramped space, low efficiency, and poor response time when locating the right carton.
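The warehouse analogy maps onto a simple cleanup model: each zone holds some still-valid blocks, and freeing a zone costs one copy per valid block. The sketch below uses a cheapest-first (greedy) policy, which is a common textbook choice and only an assumption here. The function name and the "copy budget" unit standing in for idle time are hypothetical.

```python
def reclaim_during_idle(valid_blocks, copy_budget):
    """Reclaim zones cheapest-first until the idle-time copy budget runs out.

    valid_blocks: dict of zone id -> count of still-valid blocks in that zone.
    copy_budget: number of block copies the idle window allows (hypothetical unit).
    Returns the list of zone ids freed, in the order they were reclaimed.
    """
    freed = []
    for zone_id in sorted(valid_blocks, key=valid_blocks.get):
        cost = valid_blocks[zone_id]
        if cost > copy_budget:
            break  # every remaining zone costs at least this much
        copy_budget -= cost
        freed.append(zone_id)
    return freed


zones = {"z0": 50, "z1": 5, "z2": 20}
long_idle = reclaim_during_idle(zones, copy_budget=30)
short_idle = reclaim_during_idle(zones, copy_budget=4)
```

With a long idle window, two zones are freed; with a very short one, none are, so free space shrinks and later commands have less room to work with.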

Specified workloads: Our drives are built to work in specific environments. The firmware is tailored to a specific application use case and, as such, is designed with widely varying zone sizes, buffering, and flush policies that govern how data is committed.

We collect a lot of field data to deeply understand workloads, data usage, idle time, read/write ratio, and other characteristics so we can design firmware that is highly optimized for specific segments (e.g. personal computing, NAS, etc.). As our drives are optimized for specific purposes, performance may be impacted if a drive is used for a purpose for which it was not designed.

A Paradigm Shift

DMSMR technology is relatively new, and it’s evolving as we continue to advance performance and other drive capabilities. While this type of purpose-built design is exciting in its flexibility, it naturally comes with a lot of questions. We plan to regularly provide technology insight and use case guidance.