Performance and price are all-too-obvious pitfalls when purchasing SSDs

How much are you willing to spend on SSDs to configure an SSD cache on your NAS or build all-flash storage arrays? Our statistics show that the average cost per gigabyte is 0.31 USD for Synology users who have installed SSDs in the high-end models (xs/xs+). This indicates that Synology users are price-sensitive, but at the same time, they’re also pursuing a boost in performance.

Google “SSD performance” and you will get a bunch of results showing you how to interpret the performance figures from SSD specifications. They’ll tell you that 4K IOPS is the figure you should pay extra attention to, because random read/write performance on small 4K blocks reflects the actual performance of the SSD more accurately than sequential throughput does.

If you read the performance figures on the spec sheet, you will find out the following two facts:

Sustained performance

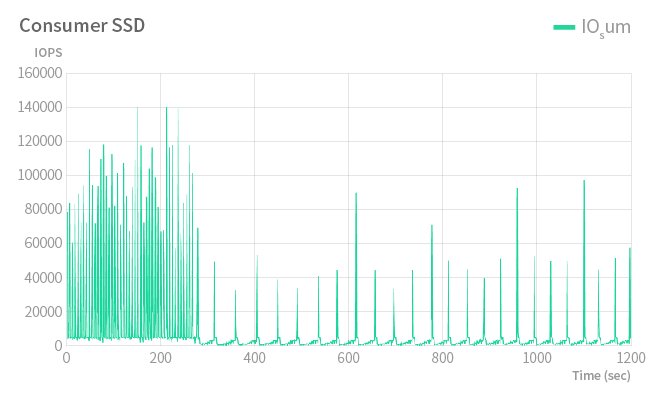

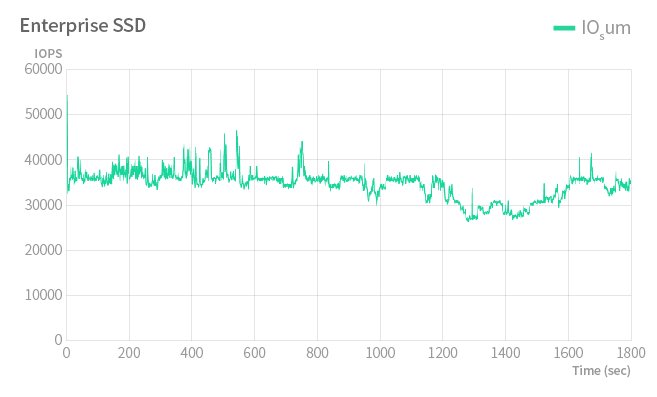

When you take a closer look at the detailed performance test, you may find that SSD performance changes over time. SSD vendors sugarcoat the performance figures by revealing only part of the whole picture.

For the consumer SSD, the IOPS figures start off on a strong note and peak at 140,000. Although the numbers remain rather high in the early stage (from 0 to around 300 seconds), they begin to drop dramatically not long after that. From then on, the numbers swing between sharp peaks and troughs like a roller-coaster ride. In stark contrast, the enterprise SSD forms a more level curve and delivers more stable IOPS. Since the figures remain relatively flat without sharp rises and falls, the overall performance is more consistent and sustainable.

Endurance and over-provisioning

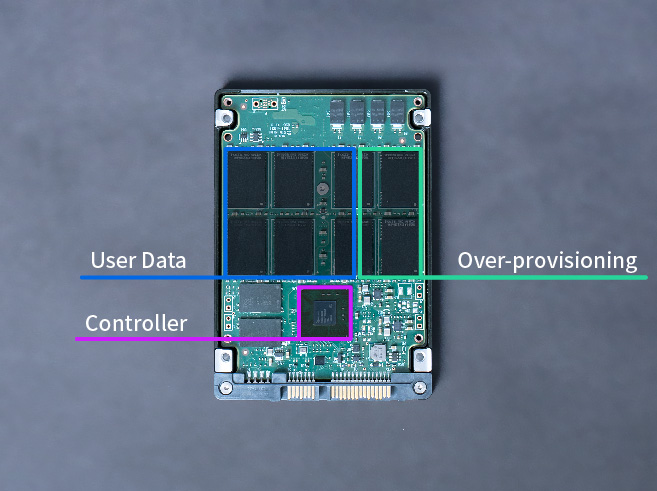

One of the key factors in enterprise SSDs delivering sustained performance is the proportion of the SSD capacity pre-configured for over-provisioning.

If you have ever installed a 1TB SSD, you may have noticed that only 960GB is available for formatting. Where did the remaining 64GB (6.25% of 1,024GB) go? Did it just disappear for no reason?

An SSD reaches the end of its lifetime after a limited number of program-erase (P/E) cycles. Since NAND flash has no overwrite command, a NAND block needs to be erased before it can be rewritten. A block is the smallest unit that can be erased, and it is composed of multiple pages, which are the smallest units that can be written. Repeated writes wear out the memory cells and shorten the lifespan of an SSD.

To reduce wear and prolong an SSD’s lifetime, techniques such as garbage collection and wear-leveling are often employed. Garbage collection is a process where valid pages are moved to free blocks so that the blocks containing invalid pages can be erased. Wear-leveling is an algorithm that spreads write/erase operations evenly among blocks by swapping frequently used blocks with free blocks, which equalizes block usage and prevents individual blocks from wearing out too soon. Both techniques require extra space. Over-provisioning sets aside a portion of the total capacity as that extra space so that the SSD controller can run these life-prolonging techniques more smoothly.
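To make the two techniques a little more concrete, here is a toy Python sketch. It is not how any real SSD controller is implemented: the `Block` class and the `pick_free_block` and `garbage_collect` functions are hypothetical names invented for illustration, and the wear-leveling rule shown (write to the free block with the fewest erases) is a simplified stand-in for the block swapping described above.

```python
class Block:
    """Toy NAND block: tracks valid data, stale pages, and erase count."""

    def __init__(self, erase_count=0):
        self.valid = []            # data held in valid pages
        self.invalid = 0           # number of stale (invalid) pages
        self.erase_count = erase_count

    def is_free(self):
        return not self.valid and self.invalid == 0


def pick_free_block(blocks):
    """Simplified wear-leveling: use the free block erased the fewest times."""
    free = [b for b in blocks if b.is_free()]
    if not free:
        # Without spare blocks there is nowhere to relocate valid pages.
        raise RuntimeError("no free block available for relocation")
    return min(free, key=lambda b: b.erase_count)


def garbage_collect(blocks):
    """Reclaim the block with the most invalid pages; return it, or None."""
    candidates = [b for b in blocks if b.invalid > 0]
    if not candidates:
        return None
    victim = max(candidates, key=lambda b: b.invalid)
    target = pick_free_block(blocks)
    target.valid.extend(victim.valid)   # move valid pages to a free block
    victim.valid = []                   # erase the whole victim block
    victim.invalid = 0
    victim.erase_count += 1
    return victim


# Block 0 holds two valid and two stale pages; blocks 1 and 2 are free.
blocks = [Block(), Block(erase_count=5), Block(erase_count=1)]
blocks[0].valid = ["a", "b"]
blocks[0].invalid = 2

garbage_collect(blocks)
print(blocks[2].valid, blocks[0].erase_count)
```

In this sketch the valid pages land in block 2 rather than block 1 because block 2 has been erased fewer times. Note that `garbage_collect` cannot run at all when no block is free, which is the role the spare capacity plays.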

Tip: If the SSD cache in your NAS becomes slower, you may recreate the SSD cache with 65% of the storage capacity to get the sustained performance back.

The purpose of over-provisioning is to extend the lifetime of your SSD, so it is closely tied to SSD endurance. To pick the right SSD, you can also take into account Terabytes Written (TBW) or Drive Writes Per Day (DWPD). If you know the capacity and warranty period of your drive, then you can convert TBW to DWPD or the other way around with the following formula:

DWPD = TBW / (365 × warranty period in years × drive capacity in TB)

For example, suppose the TBW is rated as 1,400 for a 1.92TB SSD that comes with a 5-year warranty. We then arrive at a figure of 1400 / (365 × 5 × 1.92) ≈ 0.4 DWPD. It means that you can write 40% of the drive capacity per day, which equates to about 768GB. To learn more about TBW and DWPD, you can refer to this article.
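If you want to run the same conversion for your own drives, it fits in a few lines of Python. This is a minimal sketch: the function names `tbw_to_dwpd` and `dwpd_to_tbw` are made up for illustration, and it assumes a 365-day year and capacity expressed in terabytes, as in the example above.

```python
DAYS_PER_YEAR = 365


def tbw_to_dwpd(tbw, capacity_tb, warranty_years):
    """Convert a Terabytes Written rating to Drive Writes Per Day."""
    return tbw / (DAYS_PER_YEAR * warranty_years * capacity_tb)


def dwpd_to_tbw(dwpd, capacity_tb, warranty_years):
    """Convert Drive Writes Per Day back to a Terabytes Written rating."""
    return dwpd * DAYS_PER_YEAR * warranty_years * capacity_tb


# The example from the text: 1,400 TBW, 1.92TB drive, 5-year warranty.
dwpd = tbw_to_dwpd(1400, 1.92, 5)
print(f"{dwpd:.2f} DWPD")
print(f"{dwpd * 1.92 * 1000:.0f} GB of writes per day")
```

The unrounded result is slightly below 0.4 DWPD, so the daily write budget comes out a gigabyte or so under the 768GB you get from the rounded figure.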

Power loss protection

In every SSD, there is a cache for optimizing write operations. Write commands accumulate in the cache first and are only written to flash once a certain number of them has built up. Accidents happen. If you lose power while data is still in the cache, you might suffer data loss. This is where a capacitor buffer comes in. Most enterprise SSDs are equipped with capacitors or small batteries to make sure data can still be written to the SSD in the event of a sudden power failure. When the voltage drops, the SSD controller draws power from the capacitor to gain enough time to finish the writes in progress, thereby reducing the risk of data corruption.
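The difference can be illustrated with a toy model in Python. Everything here is a simplifying assumption: the `ToySsd` class, its `flush_threshold` parameter, and the `simulate` helper are hypothetical, and a real drive's cache and power-loss circuitry are far more involved.

```python
class ToySsd:
    """Toy drive: writes collect in a cache and are flushed in batches."""

    def __init__(self, flush_threshold, has_plp):
        self.flush_threshold = flush_threshold  # writes needed to trigger a flush
        self.has_plp = has_plp                  # power loss protection fitted?
        self.cache = []                         # volatile write cache
        self.flash = []                         # persistent storage

    def write(self, data):
        self.cache.append(data)
        if len(self.cache) >= self.flush_threshold:
            self.flush()

    def flush(self):
        self.flash.extend(self.cache)
        self.cache.clear()

    def power_loss(self):
        """Return the writes lost when power is cut."""
        if self.has_plp:
            self.flush()          # capacitor keeps the drive alive long enough
            return []
        lost = list(self.cache)   # cached writes vanish with the power
        self.cache.clear()
        return lost


def simulate(has_plp):
    ssd = ToySsd(flush_threshold=4, has_plp=has_plp)
    for i in range(6):
        ssd.write(f"w{i}")
    lost = ssd.power_loss()
    return ssd.flash, lost


print(simulate(has_plp=True))
print(simulate(has_plp=False))
```

In both runs the first four writes reach flash when the threshold is hit. The last two are still in the cache when the power goes, and only the drive modeled with power loss protection gets them onto flash.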

As SSD technology matures and becomes more mainstream, SSDs on the market come in different form factors, functions, capacities, etc. It is essential that you select the right SSD for your business workloads. However, you may suffer from hidden costs if price and performance are the only factors in your purchasing decisions. Take sustained performance, SSD endurance, and power loss protection into consideration, and hopefully you’ll find an SSD that best suits your needs.

Rather than drawing a bright line between enterprise and consumer SSDs, Seagate blurred the lines between the two and opened up new possibilities by launching the IronWolf SSD designed especially for NAS. Read the review (http://sy.to/ssdreview) by our Community moderator and see how you may benefit from it.