Generative AI (GenAI) tools are revolutionizing business operations, offering the ability to boost efficiency rapidly and solve complex problems. However, this exciting potential comes with a significant challenge: data privacy.

In March, a Korean conglomerate lifted a ban on the use of GenAI, only to reinstate it weeks later after employees shared sensitive internal information with these tools, including proprietary code and a meeting recording. The incident shows that while organizations want to harness AI to increase productivity, they must also manage and control the risk of data leakage.

To take advantage of advanced AI technologies without risking incidents like this one, Synology has built comprehensive de-identification techniques and meticulous guardrails into our workflow. These measures ensure that customer information is handled responsibly and that high standards of data security and privacy are upheld.

Performing de-identification within a GDPR-compliant environment

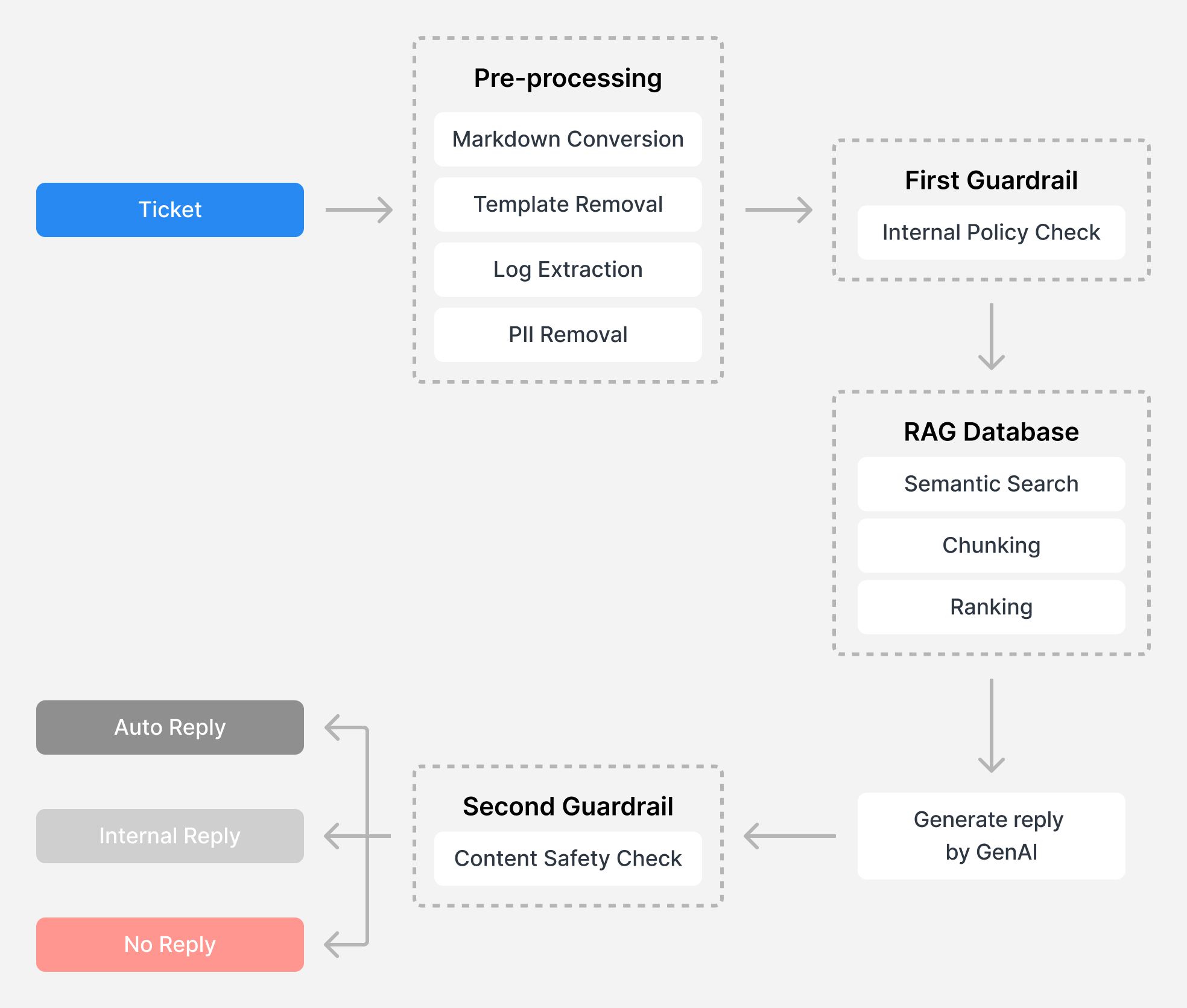

Synology has developed a Retrieval-Augmented Generation (RAG) system to improve the efficiency and accuracy of our technical support. It draws on a database of support cases from the past year whose solutions have been approved by professional technical support engineers, providing up-to-date insights specific to Synology products.

When a new request arrives, the RAG system analyzes the customer’s question and retrieves relevant resolutions from this database, producing higher-quality responses than GenAI trained only on public data.
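To illustrate the retrieval step, here is a minimal Python sketch. It is not Synology’s implementation: production RAG systems usually rank documents with learned embeddings, whereas this toy version swaps in bag-of-words cosine similarity so that it runs with the standard library alone. The `PAST_CASES` data and the `vectorize`, `retrieve`, and `build_prompt` helpers are hypothetical, and the cases are assumed to be de-identified already.

```python
import math
import re
from collections import Counter

# Hypothetical, already de-identified past cases: (question, engineer-approved resolution).
PAST_CASES = [
    ("Backup task fails because the destination is unreachable",
     "Confirm the destination is online, then relink the backup task."),
    ("Cannot access a shared folder over SMB from Windows",
     "Enable SMB2/SMB3 on the server and check the folder permissions."),
    ("Storage pool shows degraded status after a drive failure",
     "Replace the failed drive, then repair the storage pool."),
]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2) -> list[tuple[float, str, str]]:
    """Return the k past cases most similar to the new question."""
    q = vectorize(question)
    scored = [(cosine(q, vectorize(past_q)), past_q, answer) for past_q, answer in PAST_CASES]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Ground the generation step in the retrieved, engineer-approved resolutions."""
    context = "\n".join(f"- Case: {q}\n  Resolution: {a}" for _, q, a in retrieve(question, k))
    return (
        "Answer using only the verified resolutions below.\n"
        f"{context}\n\nCustomer question: {question}"
    )
```

For example, `retrieve("shared folder not accessible over SMB")` ranks the SMB case first because it shares the most terms with the question; the prompt then asks the model to answer from that approved resolution rather than from general knowledge.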

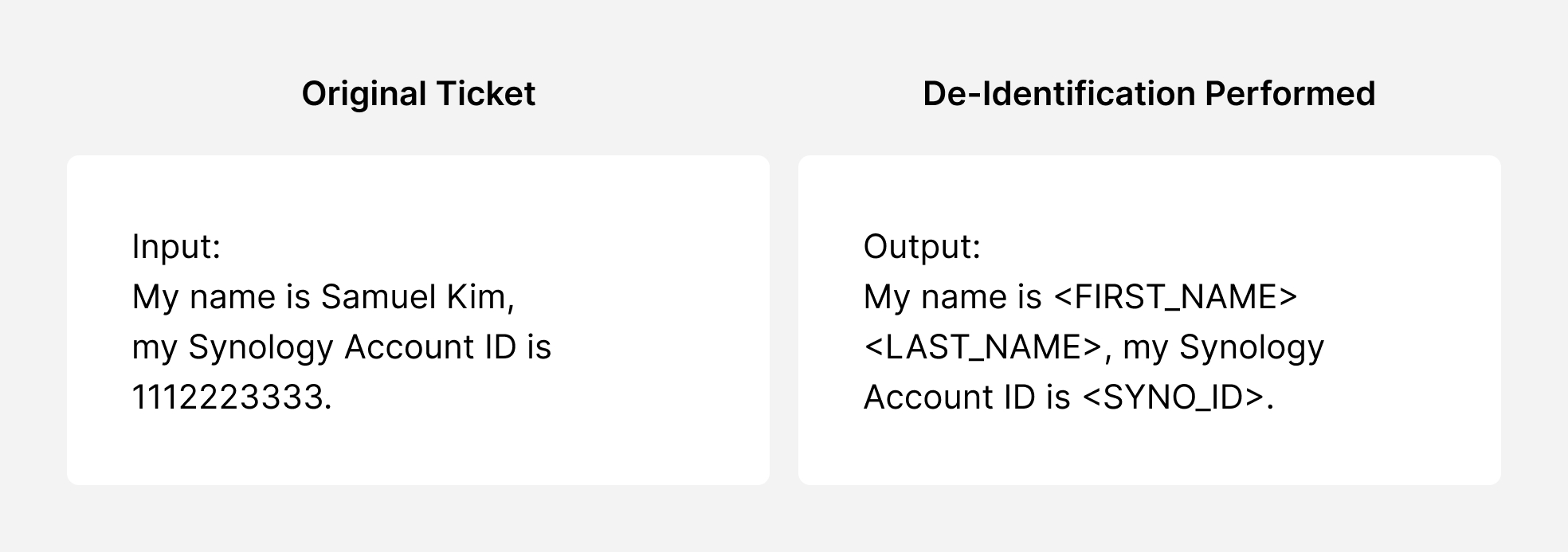

The system is built on a foundation of customer privacy protection: a comprehensive de-identification mechanism ensures that all data from past cases and newly received tickets is anonymized before it is used. The mechanism combines the following techniques:

Regex Identification: Regular expressions (regex) identify patterns such as email addresses and phone numbers in support tickets.

Named Entity Recognition (NER): Uses natural language processing to detect entities, such as names, by understanding the context in which they appear.

Checksum Validation: Verifies the check digits of detected patterns to confirm that matches are genuine and to reduce false positives.

Context Analysis: Analyzes surrounding text to increase detection confidence.

Anonymization Techniques: Protect the detected sensitive information, for example by masking or replacing it, so that it can no longer identify the customer.
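The detection and anonymization steps listed above can be sketched in a few dozen lines of Python. This is a simplified illustration under stated assumptions, not the production mechanism: the entity types, regex patterns, confidence scores, context cue words, and the 0.5 threshold are all hypothetical; the Luhn algorithm stands in for checksum validation (it applies to payment card numbers); and NER is omitted because it requires a trained NLP model, which a library such as spaCy would typically provide.

```python
import re

# Hypothetical detection rules: entity type -> (pattern, base confidence).
RULES = {
    "EMAIL": (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), 0.9),
    "PHONE": (re.compile(r"\+?\d[\d -]{6,}\d"), 0.6),
    "CARD": (re.compile(r"\b(?:\d[ -]?){12,18}\d\b"), 0.7),
}
# Hypothetical context cues inspected in the text just before each match.
POSITIVE_CUES = {"PHONE": ("phone", "call", "tel", "mobile"), "CARD": ("card", "visa", "payment")}
NEGATIVE_CUES = ("version", "build", "serial", "date")
WINDOW = 30      # characters of preceding context to inspect
THRESHOLD = 0.5  # minimum confidence required to anonymize a match


def luhn_valid(candidate: str) -> bool:
    """Checksum validation: the Luhn check digit used by payment card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def detect(text: str) -> list[tuple[int, int, str, float]]:
    """Return non-overlapping (start, end, label, confidence) spans above THRESHOLD."""
    candidates = []
    for label, (pattern, base) in RULES.items():
        for m in pattern.finditer(text):
            score = base
            # Checksum validation: a card-shaped number must pass the Luhn check.
            if label == "CARD":
                if not luhn_valid(m.group()):
                    continue
                score += 0.2
            # Context analysis: nearby cue words raise or lower confidence.
            context = text[max(0, m.start() - WINDOW):m.start()].lower()
            if any(cue in context for cue in POSITIVE_CUES.get(label, ())):
                score += 0.3
            if any(cue in context for cue in NEGATIVE_CUES):
                score -= 0.4
            candidates.append((m.start(), m.end(), label, min(score, 1.0)))
    # Resolve overlaps: prefer higher confidence, then longer spans.
    candidates.sort(key=lambda c: (-c[3], c[0] - c[1]))
    kept = []
    for c in candidates:
        if c[3] >= THRESHOLD and all(c[1] <= k[0] or c[0] >= k[1] for k in kept):
            kept.append(c)
    return sorted(kept)


def anonymize(text: str) -> str:
    """Anonymization: replace each detected span with a typed placeholder."""
    # Work right to left so earlier offsets remain valid after each replacement.
    for start, end, label, _ in reversed(detect(text)):
        text = text[:start] + f"<{label}>" + text[end:]
    return text


if __name__ == "__main__":
    sample = ("Contact jane.doe@example.com or call +1 555 123 4567. "
              "Card 4111 1111 1111 1111. Logs dated 2024-01-15.")
    print(anonymize(sample))
    # Contact <EMAIL> or call <PHONE>. Card <CARD>. Logs dated 2024-01-15.
```

In this sketch, context analysis works in both directions: "call" before a number raises confidence that it is a phone number, while "dated" lowers it below the threshold, so the date in the sample is left intact.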

Most importantly, this comprehensive de-identification process is performed within a GDPR-compliant environment, ensuring complete regulatory compliance and data anonymization.

Prevent harmful, biased, or otherwise undesirable outputs with guardrails

After de-identification, every AI-generated response passes through two guardrails that check it against policy, preventing inadvertent disclosure of sensitive information or potentially harmful advice.

Internal Policy Check: The first guardrail checks for internal policy violations and any risk of data loss for users. For instance, if a ticket requests installation files, asks about DSM or application versions that could affect the user’s existing environment, seeks assistance with Common Vulnerabilities and Exposures (CVE) issues, or references other support tickets, the system withholds an automatic response and instead gives the technical support engineer a summary of the main factors behind the decision for potential escalation.
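A rule-based version of this first guardrail might look like the Python sketch below. It is an illustration only: the real check may well rely on a model-based classifier, and the factor names, regex patterns, and ticket-number format here are hypothetical. When any rule fires, the sketch withholds the automatic reply and returns the triggering factors as a summary for the engineer.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules: human-readable factor -> pattern that triggers it.
POLICY_RULES = {
    "requests installation files": re.compile(
        r"\b(?:installer|installation files?|download link)\b", re.IGNORECASE
    ),
    "asks about DSM or application versions": re.compile(
        r"\b(?:downgrade|roll ?back|older version|beta version)\b", re.IGNORECASE
    ),
    "involves a CVE or vulnerability": re.compile(
        r"\bCVE-\d{4}-\d{4,}\b|\bvulnerabilit(?:y|ies)\b", re.IGNORECASE
    ),
    # Assumes ticket numbers have five or more digits, e.g. "ticket #1234567".
    "references another support ticket": re.compile(
        r"\b(?:ticket|case)\s*#?\d{5,}\b", re.IGNORECASE
    ),
}


@dataclass
class PolicyDecision:
    allow_auto_reply: bool
    factors: list[str] = field(default_factory=list)

    def summary(self) -> str:
        """Short explanation handed to the technical support engineer."""
        if self.allow_auto_reply:
            return "No internal policy risks detected."
        return "Escalate to engineer. Triggering factors: " + "; ".join(self.factors)


def internal_policy_check(ticket_text: str) -> PolicyDecision:
    """Stop automatic handling if any policy rule matches the ticket."""
    factors = [name for name, rule in POLICY_RULES.items() if rule.search(ticket_text)]
    return PolicyDecision(allow_auto_reply=not factors, factors=factors)
```

Returning the list of factors, rather than a bare yes/no, is what lets the engineer see at a glance why the ticket was escalated.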

Content Safety Check: The second guardrail ensures that generated responses do not disclose sensitive information such as console commands, remote access details, or other information that may be accurate in some contexts but unavailable or inappropriate in others. Once a response passes this guardrail, the system makes the final decision: reply automatically or pass the ticket to support staff for review.
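The content safety check and the final routing decision could be sketched in Python as follows. The unsafe-content patterns are hypothetical examples, and the article does not say how the final decision is made, so this sketch assumes a model confidence score compared against an arbitrary 0.8 threshold; any safety violation also sends the ticket to staff.

```python
import re
from enum import Enum


class Route(Enum):
    AUTO_REPLY = "auto_reply"
    STAFF_REVIEW = "staff_review"


# Hypothetical patterns for content that must not reach a customer automatically.
UNSAFE_PATTERNS = {
    # Shell prompts at the start of a line, or common administrative commands.
    "console command": re.compile(
        r"^\s*\$ |\b(?:sudo|ssh|rm -rf|chmod|systemctl)\b", re.MULTILINE
    ),
    # IPv4 addresses (optionally with a port) or inline credentials.
    "remote access detail": re.compile(
        r"\b(?:\d{1,3}\.){3}\d{1,3}(?::\d{1,5})?\b|\b(?:password|passcode)\s*[:=]",
        re.IGNORECASE,
    ),
}


def content_safety_check(response: str) -> list[str]:
    """Return the names of every unsafe-content category found in the response."""
    return [name for name, pattern in UNSAFE_PATTERNS.items() if pattern.search(response)]


def route_response(response: str, confidence: float, threshold: float = 0.8) -> tuple[Route, list[str]]:
    """Decide whether to auto-reply or hand the ticket to support staff.

    `confidence` is an assumed model score in [0, 1]; the threshold is arbitrary.
    """
    violations = content_safety_check(response)
    if violations or confidence < threshold:
        return Route.STAFF_REVIEW, violations
    return Route.AUTO_REPLY, violations
```

Keeping the safety check independent of the confidence test means that a confidently generated response containing a console command or an IP address is still routed to a person.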

Conclusion

This automated, AI-powered support workflow has significantly improved response accuracy and relevance and made our response times twenty times faster. By implementing rigorous de-identification processes and robust guardrails, we ensure data confidentiality and adhere to strict privacy protocols.

Developing this AI-powered customer support system has taught us that while AI has powerful problem-solving capabilities, it must be thoroughly constrained by control and review mechanisms to balance efficiency and privacy. Moving forward, Synology will keep its privacy-first commitment, leveraging AI’s potential while safeguarding customers’ valuable data.