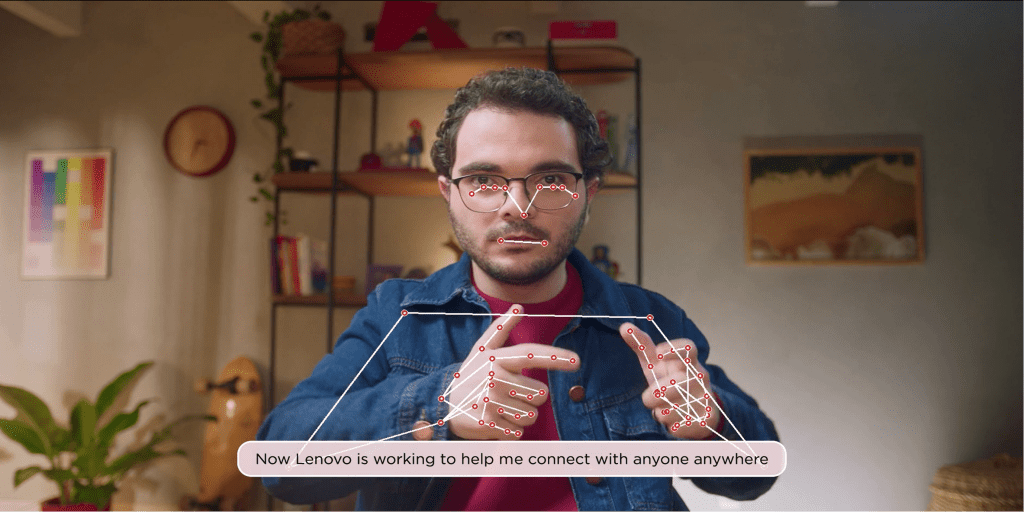

The groundbreaking technology, showcased at Lenovo’s Tech World event, uses computer vision and an original AI engine to interpret Libras, the Brazilian sign language, in real time.

During Lenovo’s Tech World event, a software developer named Gabriel crossed the stage and warmly greeted chairman and CEO Yuanqing Yang using Libras, the official Brazilian Sign Language. While YY, as he’s known at Lenovo, does not know Libras, he understood Gabriel perfectly—through the power of AI. A camera captured the precise movements of Gabriel’s hands while an original AI engine produced a real-time text and voice translation. The language barrier between Gabriel and YY dissolved almost instantly, creating a seamless, personal connection.

This quick interaction showcased a groundbreaking accessibility solution pioneered by Lenovo researchers. The new technology is poised to transform the lives of countless individuals, among them the 2.3 million people in Brazil who are deaf or hard of hearing.

“This scalable solution exemplifies the potential to create a new AI-powered paradigm for accessibility and inclusion,” said Hildebrando Lima, Lenovo’s director of research and development in Brazil. “It’s a privilege to do such meaningful work serving our communities in Brazil and deliver Lenovo’s vision of AI for all.”

The R&D team built the AI solution to facilitate interactions where a sign language interpreter may be unavailable—from retail spaces to hospitals—to increase autonomy and create connection.

Unseen on the Tech World stage, Lenovo edge servers provided the computing power needed to run the AI and interpret the dense data captured as Gabriel signed a greeting. While cloud computation is an option, the edge servers provide greater speed and reliability at the precise location where the AI is needed.

The demonstration was partly a proof of concept—especially Gabriel’s AI voice, which was selected by his own family from 13 custom options—but the underlying technology is quite mature after four years of development. Dozens of deaf and hard-of-hearing individuals using Libras have already contributed thousands of hours of anonymized video data to build the training set and advance the AI.

Core accessibility R&D

During an internal team discussion at Lenovo in 2019, a software developer fluent in Libras pointed out many day-to-day accessibility issues, and she challenged Lenovo to do more to improve independence and quality of life for the deaf community.

“As a company, we are committed to delivering smarter technology for all, and that means prioritizing inclusivity and considering the diversity of our customers and communities,” Lima said. “We embraced the challenge.”

The Lenovo team in Brazil began thinking about developing a solution: a real-time translation chat tool that lets deaf or hard-of-hearing people sign to a device’s camera while an algorithm instantly translates from Libras into written or spoken Portuguese. Now, thanks to the ubiquity of generative AI and multilingual data sets, the output can be translated into many more languages.

However, real-time video capture and translation between languages involve a staggering amount of data, not least the individual gestures for each word and the syntax of each sentence. Just as regional accents within a spoken language like English can differ dramatically, signing movements and styles can vary from one Libras user to another.

“There are so many hurdles involved with the video capture alone – including the person’s skin color, background color, lighting, clothing, the speed of a signer’s gestures, and hand positions relative to the body – to name just a few,” Lima said. “On top of that, not every camera has the same level of depth perception.”

To tackle the data challenge, Lenovo collaborated with Brazilian innovation center CESAR, sharing expertise on capturing and cataloguing video to lay the foundation for the AI. Lenovo and CESAR have since created a data set of thousands of Libras videos to train the core algorithm to identify and contextualize individual gestures. Lenovo then led development of the breakthrough AI at the heart of the solution.

The AI recognizes both hand positions and the articulation points (joints) of the signer’s fingers. After processing these movements and gestures, the AI can accurately identify the flow of a sentence and rapidly convert the sign language into text.

The team also collaborated with Lenovo’s Product Diversity Office (PDO), whose mission is to ensure Lenovo products work for everyone, regardless of their physical attributes or abilities. The PDO’s inclusive design experts helped to identify areas of potential concern—skin tone, hair style, corrective lenses, and limb differences, for example—and to make sure product testing accounted for these characteristics.

Delivering real, reliable solutions for all

At a recent internal event in Brazil exploring inclusion in Lenovo workplaces, a member of the Lenovo R&D team heard the story of a deaf person who was unable to fully communicate with her parents throughout childhood. She faced major challenges, relying heavily on sign-language interpreters who were not always available, especially at home.

“Imagine being unable to talk easily to your friends or parents for your entire childhood, or with your colleagues at work,” Lima said. “It’s the kind of intimate, family, education, and workplace inclusion scenario where this solution can change so much.”

The Lenovo R&D team emphasized that the solution is not intended as a substitute for people learning Libras or other sign languages; instead, it bridges existing communication gaps. Beyond that, the AI might actually be used to accelerate learning sign language, using computer vision to track the accuracy of gestures and “instruct” users to make adjustments. Deployed on wearable devices or through augmented reality, the AI could act as a coach in immersive learning experiences.

Lima’s R&D team partnered with Lenovo’s Infrastructure Solutions Group to find an edge computing solution. Relying exclusively on the cloud, and therefore on a fast, stable internet connection, works in some situations but not all. Potential users at a hospital or airport, for example, where time is at a premium, would not want to depend on an unpredictable connection. Computing at the edge is also in line with Lenovo’s pocket-to-cloud portfolio, which brings AI to the source of the data and into the hands of users.

The next step is to scale the project beyond internal testing. More data points will be needed to roll out a real-time sign language translation interface at scale. The team is exploring self-learning algorithms and other technology to accelerate development, especially as the user base and data sets grow.

Lenovo is also exploring ways to tailor the translation solution to specific industry verticals, such as finance or retail, where the data sets can be more finely tuned and optimized to provide an ideal user experience. As the solution develops and inspires more inclusive technology, the more than 430 million deaf and hard-of-hearing individuals around the globe may experience the profound potential of AI.